Different implementations of a simple Collatz iterator and their performance

The Collatz conjecture was proposed by Lothar Collatz in 1937. The conjecture is also known as the 3n + 1 conjecture.

The procedure is that if n is divisible by two then divide by two, else multiply by 3 and add 1, iterate until n reaches 1. The unproven conjecture is that for all values of n the procedure will always reach 1. The number of iterations until n reaches 1 is called the delay.

The benchmark times the iteration of the 226 first values of n. The point of this exercise is not to make the fastest possible Collatz iterator but to compare different compilers and hardware using the same algorithm. Using a more efficient algorithm will give much better results than using faster hardware or better compilers.

Contents

Visual C++

Both Microsoft Visual C++ and Intel C++ were tested and they gave similar results.

Vista 64, 2.4 GHz core 2 Q6600

- 22.1s - 64 bit exe, one thread, unrolled 1000 times

- 30.5s - 64 bit exe, one thread, unrolled 10 times

- 45.9s - 32 bit exe, one thread, unrolled 1000 times

- 49.2s - 64 bit exe, one thread, not unrolled

- 53.3s - 32 bit exe, one thread, unrolled 10 times

- 76.1s - 32 bit exe, one thread, not unrolled

Vista 32, 2.66 GHz core 2 E6750

- 41.3 - 32 bit exe, one thread, unrolled 1000 times

- 47.7 - 32 bit exe, one thread, unrolled 10 times

- 65.6 - 32 bit exe, one thread, not unrolled

Visual Basic 2008

The code is compiled to common intermediate language that is made executable by the .net just in time compiler. The advantage is that the JIT compiler can optimize the code for the specific CPU at runtime. In this case the slower CPU with 64 bit registers is the fastest because of the optimization. The code should also run unmodified on Linux with Mono installed. The multithreaded version uses Microsoft Parallel Extensions to .NET Framework 3.5 and required almost no change to the program.

Vista 64, 2.4 GHz core 2 Q6600

- 55.4s - .net exe, one thread

- 11.3s - .net exe, four threads, unrolled 100 times

Vista 32, 2.66 GHz core 2 E6750

- 66.4s - .net exe, one thread

- 42.9s - .net exe, one thread, unrolled 100 times

- 33.6s - .net exe, two threads

- 22.9s - .net exe, two threads, unrolled 100 times

x86 assembly language

Because of the suspected low quality of 32 bit code generated by modern C++ compilers a hand crafted assembly version was made for comparison. The performance gain was made by keeping the value in a 64 bit MMX register and exploiting some statistical properties of the algorithm that is not obvious to a normal compiler.

Vista 32, 2.66 GHz core 2 E6750

- 38.5s - optimized, not unrolled

- 29.1s - optimized, unrolled 10 times

- 24.5s - optimized, unrolled 1000 times

CUDA

To check the quality of the compiler a handcrafted version was made in PTX assembly, it produced similar results.

GeForce 8800 GTS 512 MB (G92) 1.625 GHz

- 2.07s - 128 blocks x 256 threads

BSGP

The Bulk-Synchronous GPU Programming compiler is highly advanced and is capable of impressive results but the documentation is severely lacking and the future of the compiler seems unclear. Because of the lack of documentation the program will not display all delay records but it still calculates them.

GeForce 8800 GTS 512 MB (G92) 1.625 GHz

- 2.04s - 32768 threads

Conclusions

CPU performance

The difference between the slowest and the fastest CPU program was more than 340%. It was very difficult to understand what caused the difference and how to get the best result. Unrolling a loop 1000 times to reach maximum performance is counter intuitive and disruptive. The hand crafted assembly version showed that the compilers did not make optimal code for 32 bit CPUs. Creating an optimal assembly program requires detailed knowledge of the inner workings of the CPU and is extremely time consuming since the optimizing reference manual is more than 500 pages long and many of the "rules" described there are in conflict with each other.

Visual Basic did in some cases perform just as well as C++ and should not automatically be dismissed as a language for performance computing, the same goes for the other .net languages.

Branch prediction and unrolling

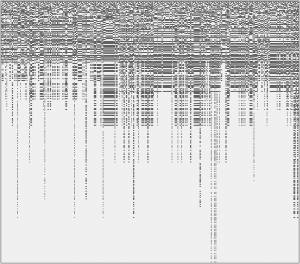

One of the largest surprises was that it helped to unroll the loop up to 1000 times. When calculating the delay for a given n, a sequence of numbers is generated. A branch is taken or not taken based on if the current number in the sequence is odd or even. The graph shows odd numbers in the sequence as black pixels and so shows which code path is taken at a given iteration when calculating a given n.

It turns out that even if there is little correlation between following iterations of the loop there is a strong correlation between the same iteration in the calculation of other values of n, sometimes reaching back hundreds of n. The branch pattern graph shows this as horizontal lines. Unrolling a loop completely effectively gives each iteration of the loop its own private branch prediction history and the CPU is capable of predicting the branches much more efficiently, resulting in more than twice the execution speed. Unrolling the loop changes branch prediction from following vertical lines to horizontal lines on the graph.

GPU performance

All three versions gave almost identical results, which suggests that it is easy to achieve consistently good results if the algorithm and the hardware are well understood. The fastest single core CPU version ran at 22.1s while the fastest GPU version ran at 2.04s, a speedup of 10.83x on the GPU. The slight disappointment is that the GPU with 112 cores running at half the CPU frequency did not achieve 112 / 2 = 56x speedup but "only" 10.83x. The same code running on four CPU cores would reduce the advantage to 10.83x / 4 = 2.70x. It should be noted that this compares a 64 bit machine (the CPU) against a 32 bit machine (the GPU), emulating 64 bit. This does not mean that the GPU is disappointingly slow, it means that it is better at some things than other, just like a CPU. A different algorithm or different datatypes can give completely different results.